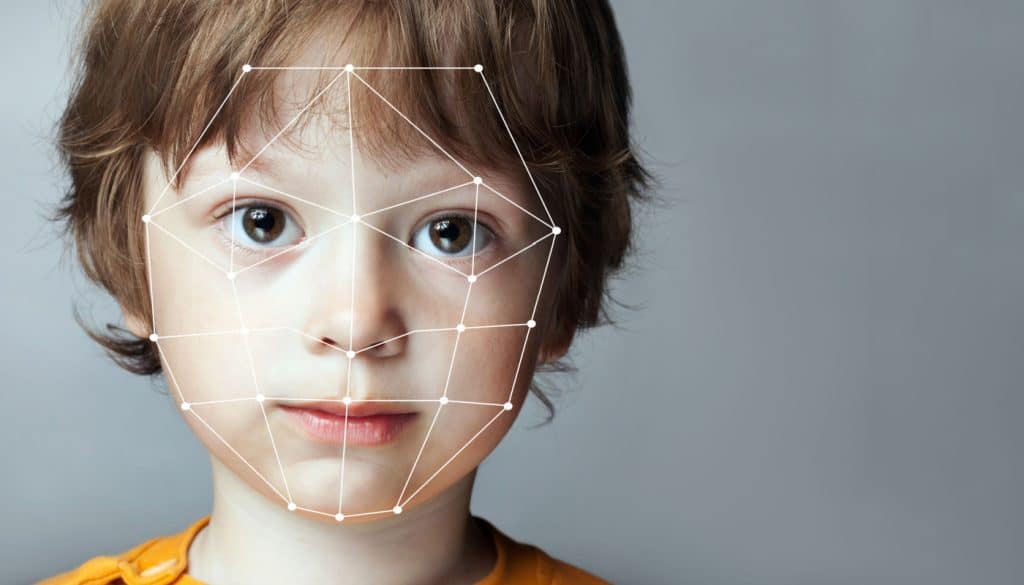

Facial Recognition Technology (FRT) has attracted growing attention in the UK education sector in recent years. It is being used to monitor attendance, detect security threats, and improve the safety of students and staff.

Facial recognition is a form of biometric authentication, which uses a person’s unique physical characteristics (such as fingerprints, iris patterns or facial features) to determine identity. In schools it is used to validate student and staff identities, often to track attendance.

A case from 2021 was prominent enough in the press to attract the attention of the Information Commissioner’s Office (ICO), prompting it to issue a case study highlighting its concerns.

The case concerned North Ayrshire Council’s (NAC) use of the technology to manage ‘cashless catering’ in school canteens. In a letter to NAC, the ICO set out the potential data protection issues.

These issues centred on the fact that the data being captured was very sensitive in nature, termed ‘special category biometric data’ within UK GDPR. The personal data captured took the form of a facial template: a mathematical representation of a pupil’s facial features that would be matched against an image taken at the cash register. In addition to identifying a lawful basis for processing this personal data under Article 6 of the UK GDPR, an organisation must also identify a separate processing condition under Article 9 of the UK GDPR.

Of the 10 conditions for lawfully processing special category data in Article 9, the ICO identified ‘explicit consent’ as the appropriate condition for processing a child’s personal data in the North Ayrshire example.

However, the ICO went on to state that “NAC were unable to demonstrate that valid explicit consent was obtained as the consent statement was not specific and could apply to a broad range of processing activities.” Information on data retention was not sufficiently transparent either, and the privacy notice did not tell readers, such as a parent seeking further clarification, about their right to lodge a complaint with the ICO.

CSRB is working with an increasing number of clients looking to provide Artificial Intelligence (AI) based solutions to both the education sector and wider public sector.

In their letter to North Ayrshire Council the ICO commented: “It is imperative to decide on the lawful basis before the personal data processing begins and to be clear throughout the process about which lawful basis is being relied upon.”

CSRB provides a number of organisations with our outsourced Data Protection Officer (DPO) service, and a growing number of Artificial Intelligence (AI) and software development organisations are seeking such support. This certified support provides reassurance on matters such as lawful bases, and allows them to demonstrate that transparency and trust are at the forefront of their service offering. It also allows them to market their solutions to the public sector with confidence that all areas of UK GDPR and other relevant UK privacy legislation have been considered, and that any potential risks to the rights and freedoms of data subjects have been mitigated.

CSRB is focused on reducing risk. Every organisation is unique, with its own set of personal data requirements and risks. Engaging CSRB as your DPO gives you a professional who is well informed and qualified to advise you on all aspects of data privacy, information security and information governance.

While FRT has the potential to solve problems around security and attendance tracking in the education sector, schools must ensure that its use is necessary, proportionate, and compliant with UK data privacy legislation and regulations.

Schools must also be transparent and accountable in their use of FRT and address any concerns or issues that arise.

There are many more benefits to outsourcing the DPO role. Contact us to find out more.